At first, I looked at how this interaction would be presented to an end user. A very rough initial idea of a dedicated streaming device shows a small screen with a scroll wheel on the side, used to cycle through and select ambient sounds to broadcast. Being a completely dedicated device would make it useful for concentrating and studying, as it does not produce the distracting notifications that an app would. However, presenting it as an app (or website) would make the interaction more accessible for users, cheaper for a user to subscribe to, and cheaper to produce.

To help develop the artefact, and to consider who would use it and how, I created rough storyboards on sticky notes. The first two follow Joe, a uni student who could use this interaction to help him study for an exam, and Moe, a man who practises mindfulness, a form of reflective meditation. Neither storyboard defines whether the interaction is presented as a dedicated device or as an app. Joe’s storyboard – focusing on improving concentration and helping with stress – shows what would most likely be the most common use of this interaction, whereas using it for meditation is much more niche. It is important to take into account people using your products and designs in unforeseen ways. During his Skype call with the Interaction Design students, Dean Brown mentioned the importance of allowing users to ‘personalise’ your products, and how fascinating it is when users find functionality in your designs that you didn’t anticipate. An example of this could be people using this streaming service in a similar way to the very popular ‘Nest Cams’, small cameras and microphones connected to doorbells so people can see who is outside their house without having to go to the door: a user could install their nature mic outside their door so that they could hear who is outside at any time.

I refined the storyboard following Joe and his difficulty studying, and included more detail.

The concept summary, which has to appear on our final concept summary board, was something I worked on and iterated on repeatedly throughout the design process. Initially, I created a list of focal points that I should cover in the summary. These included a clear user interface, the genuine nature of the live-streamed audio, the unique experience that each listening session will provide, and how this would reconnect people with nature in a variety of ways. I also considered a variety of names for my interaction, from Groovi, Flowth and uSound to Soundsly and uFocus. When I discussed my uncertainty about naming my interaction with Laura, she recommended using a homonym (a word which sounds the same but has two or more different meanings). The word ‘stream’ can mean both a small watercourse and a live-stream or broadcast. Because of this, I decided to call it Streamer, as I believe it gives the user emotive connotations in both senses of the word.

I designed a large variety of dedicated device concepts, as initially I thought that this would be the best way for users to stream their audio. Some of these devices are intended to be portable and pocket-sized, and most of them use alternative UI and UX designs. Other devices are more similar to smart hub devices (such as Google Home) in size and design.

At this point in the design process I drew up a ‘punnet’ of directions in which I could potentially take the design. During an interview, Dean Brown, a past DJCAD SocDig student who was pivotal in the design of My Nature Cam, an open-source DIY nature camera, discussed the importance of considering what part of the artefact records the information, and then how that information is presented to the user. I realised that users could be shown the ‘results’ using either an app or a dedicated physical device, and that the recording devices (mics) could either be microphones already placed in natural areas around Scotland or be deployed by the users themselves.

I really liked the idea of users deploying their own microphones – this is a more direct and physical reconnection with nature, and encourages the user to consider their environment and think about what local natural areas mean to them. Ideally, this will also mean that individual streams mean more to users, as they personally chose where to deploy the microphones.

Individual microphone deployment also allows social/peer-to-peer functionality to be implemented. Users could add each other as friends and share streams with one another, so this artefact not only connects people with nature, but also connects people to one another.

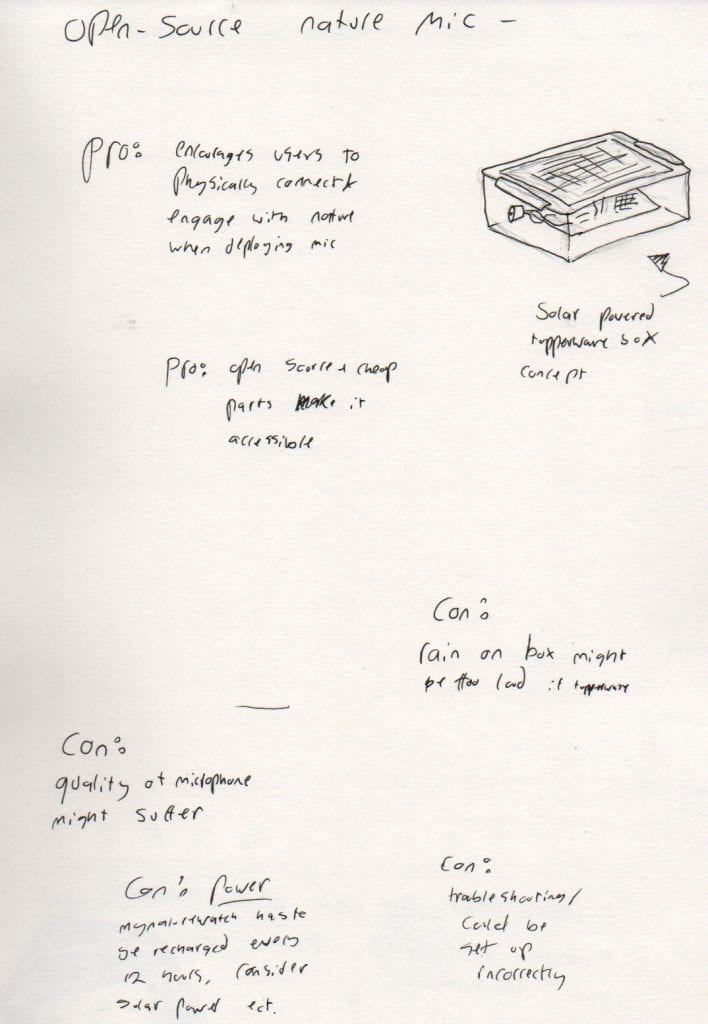

I explored the possibility of taking inspiration from the open-source design of My Nature Cam. Although the low cost of the materials needed would make the service accessible to more people, it would make it hard for older or less tech-savvy users to utilise the artefact, and audio quality would suffer. It is a very interesting possibility which I would have liked to look into more, but I decided instead to pursue designing a universal microphone with streaming capability.

I decided that the physical design of the microphone should be modular, to allow the user the kind of personalisation that I previously mentioned. This would allow users to attach portable batteries or solar cells, or even use mains power, to charge their microphone, depending on how they intend to utilise it.

I also took a lot of time to consider the design of the app. I used Adobe XD, an app prototyping tool, to create the layout and interactive prototype.

I adjusted the size and weight of my typeface to create a consistent hierarchy.

I also took time to consider a colour scheme across the application prototype and integrated this using XD’s Assets tool.

I also designed a very rough logo for my app. I wanted to reinforce the connotation of nature that comes with the word ‘stream’, so I used two simple shapes to create a forced perspective of a winding stream between two faraway mountains. This logo would be on the app icon, and would greet the user when they open the app (as seen in the app recording).

The experience prototype was probably the hardest part of the design to organise and create.

Marion Buchenau and Jane Fulton Suri state: “By the term Experience Prototype we mean to emphasize the experiential aspect of whatever representations are needed to successfully relive or convey an experience with a product, space or system. So, for an operational definition we can say an Experience Prototype is any kind of representation, in any medium, that is designed to understand, explore or communicate what it might be like to engage with the product, space or system we are designing.”

I tried to refer to Buchenau and Fulton Suri’s research as much as possible throughout this process. As our brief prompts us to design a solution to the nature disconnect, I wanted to use experience prototyping to focus not only on how the interaction would be used, but also on how I could facilitate and encourage this reconnection.

In their research, Buchenau and Fulton Suri continue, “With respect to prototyping, our understanding of ‘experience’ is close to what Houde and Hill call the look and feel of a product or system, that is the concrete sensory experience of using an artifact – what the user looks at, feels and hears while using it. But experience goes beyond the "concrete sensory." Inevitably we find ourselves asking questions about the "role" which Houde and Hill define as the functions that an artefact serves in a user’s life – the way in which it is useful to them. And even more than this, when we consider experience we must be aware of the important influences of contextual factors, such as social circumstances, time pressures, environmental conditions, etc.”

A lot of the design behind my experience prototype reflects my understanding of this distinction between the ‘role’ and the ‘look and feel’ of the artefact.

I wanted my experience prototype to consist of the interactive app prototype I created using XD, where a user could see the visual style and interact with it while the app played pre-recorded audio from a natural area. Unfortunately, Adobe XD currently doesn’t support audio, so I was unable to achieve this. Instead, I had to split the experience prototype into two parts, specifically addressing the look & feel and the role of the interaction. The XD prototype app addresses the look & feel; it can either be interacted with as an XD file or viewed as a pre-recorded video. The link to this video is in the reflective essay and videos blog post. To address the ‘role’ of this artefact, I made a simple interactive PDF file with an interactive “tile”, or button, that plays a 15-minute loop of natural sounds I recorded at the Dundee Uni Botanic Gardens.